DMOZ100k06

DMOZ100k06 is a large research data set about document metadata based on a random sample of 100,000 web documents from the Open Directory combined with data retrieved from Delicious.com/Yahoo!, Google, and ICRA.

This web page describes the DMOZ100k06 data set as published in my paper Authors vs. Readers: A Comparative Study of Document Metadata and Content in the WWW at the 7th International ACM Symposium on Document Engineering, Canada, August 2007.

The Data Set

This section gives you a short overview of DMOZ100k06. The corpus is described in detail in my paper Authors vs. Readers: A Comparative Study of Document Metadata and Content in the WWW, for which the corpus was built. The paper includes both a quantitative and qualitative analysis of DMOZ100k06.

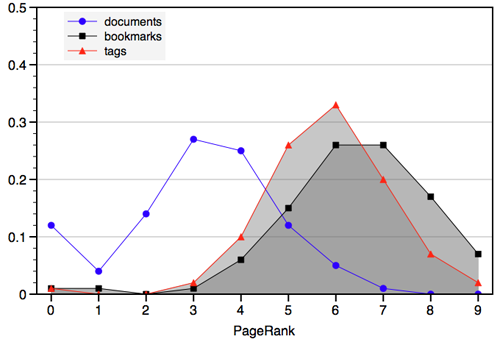

| Overview | Total | Comment |
|---|---|---|
| documents* | 97,578 | |
| bookmarks | 180,246 | |
| bookmarked documents | 13,771 | 14.1 % |
| (common) tags | 25,311 | 6,090 unique |
| tagged documents | 4,992 | 5.1 % |

*The remaining 2,422 documents of the 100,000-document sample could not be retrieved.

| Statistics per document | Mean | Std. dev. |
|---|---|---|
| bookmarks | 1.85 | 47.68 |
| tags | 0.26 | 1.80 |
| PageRank | 3.13 | 1.66 |
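
The percentages and per-document means in the two tables can be re-derived from the totals. The short check below is only a sketch: it assumes that the denominator is the 97,578 retrieved documents rather than the full 100,000-document sample, which is not stated explicitly but is the only choice consistent with the published figures. The function and constant names are illustrative.

```python
# Totals copied from the overview table.
SAMPLE_SIZE = 100_000
RETRIEVED_DOCUMENTS = 97_578
BOOKMARKS = 180_246
BOOKMARKED_DOCUMENTS = 13_771
COMMON_TAGS = 25_311
TAGGED_DOCUMENTS = 4_992


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100.0 * part / whole, 1)


def per_document(total: int) -> float:
    """Return the mean count per retrieved document, rounded to two decimals."""
    return round(total / RETRIEVED_DOCUMENTS, 2)


# Assumption: all ratios use the retrieved documents as the denominator.
unretrieved = SAMPLE_SIZE - RETRIEVED_DOCUMENTS
bookmarked_share = share(BOOKMARKED_DOCUMENTS, RETRIEVED_DOCUMENTS)
tagged_share = share(TAGGED_DOCUMENTS, RETRIEVED_DOCUMENTS)
mean_bookmarks = per_document(BOOKMARKS)
mean_tags = per_document(COMMON_TAGS)

print(unretrieved, bookmarked_share, tagged_share, mean_bookmarks, mean_tags)
```

The large standard deviation for bookmarks (47.68 against a mean of 1.85) cannot be re-derived from the totals alone; it requires the per-document values in the corpus.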

Information Sources

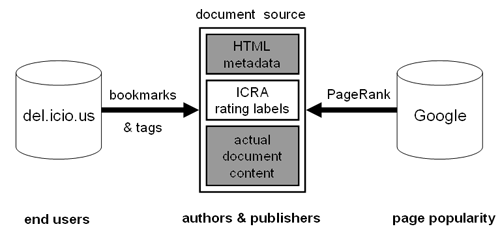

For each web document in the sample, I retrieved the actual document from the WWW plus metadata from the social bookmarking service Delicious.com, from the Internet Content Rating Association (ICRA), and from Google as shown in figure 2. This means DMOZ100k06 provides the following types of information about a web document:

- metadata by authors/webmasters: ICRA content labels

- metadata by readers/visitors: del.icio.us bookmarks and tags

- “popularity”: represented by Google PageRank

- technical infrastructure: average HTTP response time of web server

Tools of the Trade

I implemented custom software tools which relied on the services’ official APIs where possible and fell back to alternative techniques for situations where the APIs did not provide the required functionality:

- Python: custom scripts and modules, most notably my Python API for Delicious

- Perl: small script using WWW::Google::PageRank

- The Unix Swiss Army knife: grep, sed, and awk

Data Format

The corpus is in XML format with the following structure.

Documents

Each web document is represented as a <document> with the following attributes:

- url: URL of the web document

- users: Number of del.icio.us users who have bookmarked the URL

- tags: Number of del.icio.us common tags for the URL, i.e. the most popular tags (0...25). For a description of common tags, see section "Tags" below.

- pagerank: Google PageRank of the URL

- icra: ICRA label status of the URL, with the following possible values:
  - red: no label(s) or only incorrect label(s)
  - yellow: correct label but only partial coverage of the document content, i.e. some elements such as images or banners are not covered by the label
  - green: correct label, full coverage of the document content
  - error: error during label tests, such as server errors or network timeouts

- http_response_time: Mean HTTP response time of the web server serving the URL, averaged over several runs on different days and at different times, with DNS caching applied

- <tag> elements: del.icio.us common tags in detail (see below)

Note that the ICRA label test checks only the syntactic correctness of a label, not whether the content of the URL actually matches the label's description! For a semantic analysis of ICRA labels, read my paper Web Page Classification: An Exploratory Study of Internet Content Rating Systems (PDF).
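
As an illustration of the attribute list above, one `<document>` element could be mapped to a typed record as follows. This is a minimal sketch, not part of the original tooling: the `Document` class, the `parse_document` helper, and the chosen Python types are assumptions. In particular, it assumes that every attribute is present on every element and that `pagerank` is an integer, which the description does not guarantee. The unit of `http_response_time` is not stated either, so the value is kept as a plain float.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# The four ICRA status values named in the attribute description.
ICRA_STATUSES = {"red", "yellow", "green", "error"}


@dataclass
class Document:
    """Hypothetical typed view of one <document> element."""
    url: str
    users: int
    tags: int
    pagerank: int
    icra: str
    http_response_time: float


def parse_document(element: ET.Element) -> Document:
    """Convert one <document> element into a Document record.

    Raises KeyError if an attribute is missing and ValueError if the
    icra value is not one of the four documented statuses.
    """
    attrs = element.attrib
    icra = attrs["icra"]
    if icra not in ICRA_STATUSES:
        raise ValueError(f"unexpected icra value: {icra!r}")
    return Document(
        url=attrs["url"],
        users=int(attrs["users"]),
        tags=int(attrs["tags"]),
        pagerank=int(attrs["pagerank"]),
        icra=icra,
        http_response_time=float(attrs["http_response_time"]),
    )


example = ET.fromstring(
    '<document url="http://www.example.com/" users="33" tags="9" '
    'pagerank="8" icra="red" http_response_time="0.541234" />'
)
print(parse_document(example))
```

If the real corpus omits attributes for some documents, the strict lookups would need to be relaxed, for example with `attrs.get(...)` and explicit defaults.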

Tags

Each <document> may contain up to 25 <tag> elements, which represent the Delicious.com common tags for the document. Delicious.com limits common tags to 25 per URL, which means that the list of all tags attached to a document might actually be (much) larger than 25. Only the common tags of a document were retrieved, rather than all tags, because of technical restrictions. Note that the number of <tag> elements of a document is always equal to the value of the <document>'s "tags" attribute (see above).

- name: Name of the tag, e.g. "news" or "delicious"

- weight: Weight of the tag (1...5) as returned by Delicious.com; higher values denote higher popularity of the tag
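
The constraints stated in this section (at most 25 `<tag>` elements, a count equal to the "tags" attribute, and weights from 1 to 5) lend themselves to a quick consistency check. The sketch below is one possible way to write it. The helper name `tag_problems` and the message wording are illustrative, and a missing attribute is treated as 0 purely for convenience.

```python
import xml.etree.ElementTree as ET

MAX_COMMON_TAGS = 25  # Delicious.com limit on common tags per URL
MIN_WEIGHT, MAX_WEIGHT = 1, 5  # documented weight range


def tag_problems(document: ET.Element) -> list:
    """Return descriptions of tag inconsistencies found in one <document>."""
    problems = []
    tags = document.findall("tag")
    declared = int(document.get("tags", "0"))
    if len(tags) != declared:
        problems.append(
            f"tags attribute is {declared}, but {len(tags)} <tag> elements found"
        )
    if len(tags) > MAX_COMMON_TAGS:
        problems.append(f"more than {MAX_COMMON_TAGS} <tag> elements")
    for tag in tags:
        weight = int(tag.get("weight", "0"))
        if not MIN_WEIGHT <= weight <= MAX_WEIGHT:
            problems.append(
                f"tag {tag.get('name')!r} has weight {weight} outside 1...5"
            )
    return problems


example = ET.fromstring(
    '<document url="http://www.example.com/" tags="2">'
    '<tag name="dmoz" weight="5" />'
    '<tag name="research" weight="3" />'
    "</document>"
)
print(tag_problems(example))  # an empty list means no inconsistencies
```

Returning a list of messages instead of raising on the first problem makes it easier to report every issue for a document in one pass.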

Example

    <documents>
      <document url="http://www.example.com/" users="33" tags="9" pagerank="8" icra="red" http_response_time="0.541234">
        <tag name="delicious" weight="1" />
        <tag name="dmoz100k06" weight="4" />
        <tag name="dmoz" weight="5" />
        <tag name="google" weight="1" />
        <tag name="icra" weight="1" />
        <tag name="information retrieval" weight="1" />
        <tag name="research" weight="3" />
        <tag name="web2.0" weight="1" />
        <tag name="viIsBetterThanEmacs" weight="1" />
      </document>
      ...
      ...
      ...
    </documents>
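
With close to 100,000 `<document>` elements, it can be convenient to stream the corpus instead of loading it into memory at once. The following sketch uses `xml.etree.ElementTree.iterparse` from the Python standard library for this. The `summarize` function, the inline `SAMPLE` data, and the choice of statistics are assumptions made for illustration. For the real corpus, the path of the downloaded XML file would be passed instead of the in-memory sample.

```python
import io
import xml.etree.ElementTree as ET
from collections import Counter

# Tiny stand-in for the corpus file; the values are illustrative only.
SAMPLE = b"""<documents>
  <document url="http://www.example.com/" users="33" tags="2" pagerank="8" icra="red" http_response_time="0.541234">
    <tag name="dmoz" weight="5" />
    <tag name="research" weight="3" />
  </document>
  <document url="http://www.example.org/" users="0" tags="0" pagerank="3" icra="green" http_response_time="0.120000" />
</documents>"""


def summarize(source):
    """Stream <document> elements from a path or file object and aggregate them."""
    documents = 0
    users = 0
    icra = Counter()
    tag_names = Counter()
    # "end" events fire once an element and all of its children are complete.
    for _event, element in ET.iterparse(source, events=("end",)):
        if element.tag != "document":
            continue
        documents += 1
        users += int(element.get("users", "0"))
        icra[element.get("icra")] += 1
        tag_names.update(tag.get("name") for tag in element.findall("tag"))
        element.clear()  # drop attributes and children of the processed element
    mean_users = users / documents if documents else 0.0
    return {
        "documents": documents,
        "mean_users": mean_users,
        "icra": dict(icra),
        "tag_names": dict(tag_names),
    }


summary = summarize(io.BytesIO(SAMPLE))
print(summary)
```

Here `mean_users` averages the "users" attribute over all documents, which should correspond to the mean number of bookmarks per document if each user contributes one bookmark per URL.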

Download

Legal information

By downloading, you acknowledge that:

- The data has been compiled for the purposes of illustration for scientific research.

- The copyright holders retain ownership and reserve all rights.

Download: DMOZ100k06 data corpus (version without web document sources)

How to reference

When you use DMOZ100k06 for your own research, please use my corresponding paper as a reference.

Feedback

Comments, questions and constructive feedback are always welcome. Just drop me a note.

Related Research Data Sets

- CABS120k08 (published 2008): Large research data set about Web metadata based on a sample of 120,000 web documents with data retrieved from the Open Directory Project, the AOL Search query log corpus AOL500k, Google PageRank, Delicious.com/Yahoo!, and anchor text from incoming hyperlinks